Apple has issued an urgent fix for a vulnerability in its SSL (Secure Sockets Layer) code, used to create secure connections to websites over Wi-Fi or other connections, for its iPhone, iPad and iPod Touch devices.


The fix, which is available now for both iOS 6 and iOS 7, is for a flaw which appears to have been introduced in a code change made ahead of the launch of iOS 6.0.

The flaw also affects Mac computers running Mac OSX - for which there’s no fix announced yet, although Apple says one is “coming soon”. Update: the fix is included in OSX 10.9.2, which became available on Tuesday. There is also an update for Mountain Lion, though it’s not clear why as that wasn’t thought to be affected.

The bug, and its discovery, raise a number of questions. Here’s what we do know, what we don’t know, and what we hope to know. (Apple declined to comment in relation to a number of questions we put about the vulnerability.)

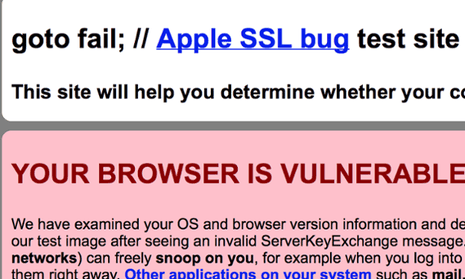

How can I check whether I’m vulnerable?

Go to gotofail.com and see what message you get. If all is well you’ll get a green message. On a vulnerable iOS device, you’ll get a warning to update. On a Mac, you’ll get a green message (your system is safe), a yellow message (the browser you’re using is safe, but other apps could be vulnerable) or a red message (telling you to patch your browser).

What should I do?

If you use an iPhone, iPad or iPod Touch, update its operating software. Go to Settings -> General -> Software Update. For devices using iOS 7, update to iOS 7.0.6; for devices on iOS 6 which can’t be updated to iOS 7 (the iPhone 3GS or iPod Touch 4G), update to 6.1.6.

Note that Apple isn’t offering an update to iOS 6 for devices which can be updated to iOS 7 (iPhone 4, iPad 2, etc). For those, your only options are to update, or live dangerously.

If you’re using a Mac on an older OS version, i.e. 10.8 (“Mountain Lion”) or earlier, you’re safe.

If you’re using a Mac on the newest OS, 10.9 (aka “Mavericks”), don’t use Safari to connect to secure websites until there’s an update. Use Mozilla’s Firefox or Google’s Chrome: they use their own code for connecting to secure websites, and no such bug has been found in it.

What did the bug do?

In theory (and perhaps, depending on who knew about it, in practice) it could allow your connections to secure sites to be spied on and/or your login details captured. Updating the software prevents that. The bug affected the SSL/TLS encrypted connection to remote sites.

What’s the importance of SSL/TLS?

When your device (handheld or PC) connects to a website using the SSL/TLS (Secure Sockets Layer/Transport Layer Security) method, the site presents a cryptographic “certificate” chain identifying itself and the authority which issued the certificate. Your device already holds a list of trusted issuing authorities, and it checks that the certificate names the site and was issued by one of those authorities.

It’s a four-step process:

1. The site presents its certificate chain.
2. Your device checks that the site’s certificate matches the name of the site you’re on.
3. Your device verifies that the certificate comes from a valid issuing authority.
4. Your device verifies that the site holds the private key matching the public key in its certificate, by checking a signature the site makes with that key.
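The four checks can be sketched as a toy C function. Everything in it is hypothetical: the `ToyCertInfo` struct, its fields and the `CheckResult` codes are illustrations, and real TLS libraries parse and verify actual certificates rather than trusting pre-computed flags. The point is the shape: a connection should be trusted only if every check passes, and the last check is the one the Apple bug effectively skipped.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical, simplified summary of what a client learns during the handshake.
   Real TLS code derives these facts by parsing and checking actual certificates. */
typedef struct {
    const char *subject_name;  /* site name the certificate was issued for */
    bool issuer_trusted;       /* chain ends at an authority on the device's trusted list */
    bool expired;              /* certificate is out of date */
    bool key_signature_valid;  /* site's signature verifies with the certificate's public key */
} ToyCertInfo;

typedef enum {
    CHECK_OK = 0,
    CHECK_BAD_NAME,            /* step 2 failed */
    CHECK_UNTRUSTED_ISSUER,    /* step 3 failed */
    CHECK_EXPIRED,             /* also rejected: out-of-date certificates */
    CHECK_BAD_SIGNATURE        /* step 4 failed */
} CheckResult;

/* Step 1 (the site presenting its chain) is modelled by the caller filling in `cert`.
   Every later step must pass; the first failure is reported. */
CheckResult toy_verify(const ToyCertInfo *cert, const char *site_name) {
    /* Exact match only: real clients compare case-insensitively and handle wildcards. */
    if (strcmp(cert->subject_name, site_name) != 0)
        return CHECK_BAD_NAME;
    if (!cert->issuer_trusted)
        return CHECK_UNTRUSTED_ISSUER;
    if (cert->expired)
        return CHECK_EXPIRED;
    if (!cert->key_signature_valid)
        return CHECK_BAD_SIGNATURE;
    return CHECK_OK;
}
```

A certificate that passes the name, issuer and date checks but carries a bad signature still comes back as `CHECK_BAD_SIGNATURE`; a verifier that silently dropped the final `if` would report `CHECK_OK` instead.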

(There’s also a guide to how it works at the gotofail.com FAQ.)

In theory, a certificate that has the wrong name for the site, or which hasn’t been issued by the authority, or which is out of date, won’t be trusted. At this point you’ll get a warning in your browser telling you that there’s something wrong and that you shouldn’t proceed or your data could be at risk.

A faked certificate could mean that the site you’re connecting to is actually being run by someone who wants to collect your user login details - as has happened in Iran. In 2011 the government there is reckoned to have used a certificate issued by a subverted certificate authority to set up a site which (through DNS diversion) could pretend to be Google’s gmail.com - and captured the data from dissidents who thought they were logging into the site.

So it’s important that your device can authenticate SSL certificates correctly. Sometimes you will come across sites where you get a certificate warning but which say you should trust it anyway (for example because it’s a subsidiary with a different name from the one which owns the certificate). Be wary, and don’t approve such certificates lightly.

What was the bug?

Due to a single repeated line of code in an Apple library, almost any attempt to verify a certificate on a site would succeed - whether or not the certificate’s signature was valid. It would only throw an error if the certificate itself was invalid (for example, because it was out of date).

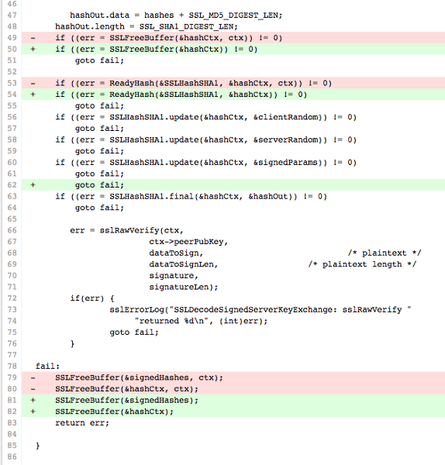

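The bug is in the code below: it’s the second “goto fail;”, which runs in every case. At that point err still holds 0 (success), so the function jumps straight to its clean-up code and reports success without ever reaching the signature check further down.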

```c
static OSStatus
SSLVerifySignedServerKeyExchange(SSLContext *ctx, bool isRsa, SSLBuffer signedParams,
                                 uint8_t *signature, UInt16 signatureLen)
{
    OSStatus err;
    ...

    if ((err = SSLHashSHA1.update(&hashCtx, &serverRandom)) != 0)
        goto fail;
    if ((err = SSLHashSHA1.update(&hashCtx, &signedParams)) != 0)
        goto fail;
        goto fail;  /* the duplicated line: runs unconditionally, with err == 0 */
    if ((err = SSLHashSHA1.final(&hashCtx, &hashOut)) != 0)
        goto fail;
    ...

fail:
    SSLFreeBuffer(&signedHashes);
    SSLFreeBuffer(&hashCtx);
    return err;
}
```
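To see why one extra line is so damaging, here is a stripped-down, self-contained reproduction of the same control flow. The names (`Status`, `hash_step`, `check_signature`, `verify_buggy`, `verify_fixed`) are hypothetical stand-ins, not Apple’s API; the only thing carried over from Apple’s code is the pattern of `if (...) goto fail;` statements sharing one `err` variable.

```c
#include <stdint.h>

typedef int32_t Status;  /* stand-in for Apple's OSStatus: 0 means success */

/* Hypothetical stand-ins: hashing always succeeds here, and the signature
   check succeeds only when the signature is genuine. */
static Status hash_step(void) { return 0; }
static Status check_signature(int sig_is_genuine) { return sig_is_genuine ? 0 : -1; }

/* Same control flow as the excerpt, duplicated line included. */
static Status verify_buggy(int sig_is_genuine) {
    Status err;
    if ((err = hash_step()) != 0)
        goto fail;
    if ((err = hash_step()) != 0)
        goto fail;
        goto fail;                      /* always taken, and err is still 0 */
    if ((err = hash_step()) != 0)       /* everything from here down is unreachable */
        goto fail;
    err = check_signature(sig_is_genuine);
fail:
    return err;                         /* 0, although the signature was never checked */
}

/* The fix: delete the duplicated line. */
static Status verify_fixed(int sig_is_genuine) {
    Status err;
    if ((err = hash_step()) != 0)
        goto fail;
    if ((err = hash_step()) != 0)
        goto fail;
    if ((err = hash_step()) != 0)
        goto fail;
    err = check_signature(sig_is_genuine);
fail:
    return err;
}
```

With a forged signature, `verify_buggy(0)` returns 0, the same “success” a genuine signature produces, while `verify_fixed(0)` returns the error.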

When did the bug appear?

… released in May 2012 … latest versions of iOS 7.1 … The diff of the two code versions shows the addition of the extra statement at line 62:

(The red lines are removed in the updated code; the green lines are added.)

It’s not an obvious difference unless you’re looking for it; in that case, it stands out like a sore thumb to any programmer.

How did the bug get there?

Arguing against the conspiracy theory is the fact that Apple publishes this code on its open source page. “Many eyes make bugs shallow”, the saying goes, and the whole internet has been able to look at this epic “goto fail” for ages. If the NSA put its secret backdoors out in the open like this, you’d expect them to be found a lot faster.

Arguing for a nefarious conspiracy is - well, not very much. Apple should have found it, but didn’t. Either of its compilers (GCC and Clang) could have warned about it, but testing by others has shown that neither does unless a particular warning flag (for “unreachable code”) is set. A compiler that flags “unreachable code” (a segment of code which can never run because it lies beneath a jump that always happens) would have caught it.
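One common defensive habit (a coding-style choice, not something attributed to Apple here) is to always put braces around the body of an `if`. In the sketch below, which again uses hypothetical names (`Result`, `digest_step`, `signature_step`, `verify_braced`), an accidentally duplicated `goto fail;` would land inside the braces and still run only when a step had actually failed. Separately, Clang’s `-Wunreachable-code` option, which `-Wall` does not turn on, reports code that can never execute, such as the statements after the unconditional `goto` in Apple’s excerpt.

```c
typedef int Result;  /* hypothetical status type: 0 means success */

static Result digest_step(void) { return 0; }                         /* hypothetical: always succeeds */
static Result signature_step(int genuine) { return genuine ? 0 : -1; } /* hypothetical check */

/* Braces around every if-body: a stray copy of `goto fail;` inside the
   braces would remain conditional instead of skipping the checks below. */
static Result verify_braced(int sig_is_genuine) {
    Result err;
    if ((err = digest_step()) != 0) {
        goto fail;
    }
    if ((err = digest_step()) != 0) {
        goto fail;
    }
    if ((err = digest_step()) != 0) {
        goto fail;
    }
    err = signature_step(sig_is_genuine);
fail:
    return err;
}
```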

One former Apple staffer who worked on Mac OSX told the Guardian it’s “extremely unlikely, at least under normal circumstances” that the flaw was added maliciously. “There are very few people on any given team at Apple, and so getting changes lost in the shuffle would be difficult. The flip side is that there aren’t strong internal consistency/integrity checks on the codebase, so if someone were clever enough to subvert normal processes there would be easy ways in.”

But, the programmer added, “this change does not specifically stand out as malicious in nature.” It almost certainly came from a copy/paste error, or a merge issue (between two branches of code) that went unnoticed: “two similar changes could cause a conflict” (where the code has an illogical flow) “and in resolving this conflict an engineer might have made a mistake.”

What do programmers say?

One former Apple employee who worked on Mac OSX, including shipping updates and security updates, told the Guardian that Apple will be able to identify who did the code checkin which created the bug. “While source code management is on a team-by-team basis (there’s no company-wide policy), nearly every team uses some system (Git or SVN) that would be able to track commits [changes to code which are “committed” for use] and assign blame.”

The most likely explanation is that it occurred through the merging of two branches of code (where two or more people were working on the same segment of code). Code merging is routine in professional programming; conflicts between separate branches are usually reconciled by hand, using editors which show the “diffs” (differences) between the old, new, and alternative new code.

Adam Langley, who works on security for Google’s Chrome browser (but hasn’t worked for Apple), says:

This sort of subtle bug deep in the code is a nightmare. I believe that it’s just a mistake and I feel very bad for whomever might have slipped in an editor and created it.

The author of the gotofail.com site thinks otherwise:

It is hard for me to believe that the second “goto fail;” was inserted accidently given that there were no other changes within a few lines of it. In my opinion, the bug is too easy to exploit for it to have been an NSA plant. My speculation is that someone put it in on purpose so they (or their buddy) could sell it.

(By “sell” he’s referring to the fact that you can now sell “zero-day exploits” to nation states and security companies - garnering up to half a million dollars or perhaps more.)

The fact that Apple is fixing the hole now suggests that it isn’t NSA-inspired. Though as John Gruber has pointed out, it’s perfectly possible that the NSA discovered this hole when iOS 6 was released and knew that it could exploit it.

One peculiar fact: if the NSA was aware of this security hole, it doesn’t seem to have told the US Department of Defense, which passed iOS 6 for use in government in May 2013.

When did Apple find the bug?

… CVE, the Common Vulnerabilities and Exposures database … Apple seems then to have begun working on the fix and how to roll it out. What’s odd is that despite finding the vulnerability then, it didn’t fix it in two beta versions of iOS 7.1 that were released after that time. One possibility - though the company won’t confirm this - is that it discovered the failure to authenticate the certificate in January, but took until now to narrow down the faulty piece of code. Given how quickly the rest of the web did the same (a matter of a few hours), this seems unlikely.

Why didn’t Apple spot the bug sooner?

… unit testing, regression testing, static code analysis …

How did Apple find the bug?

… claims to have cracked SSL … But Paget (who has just started working for Tesla) has unleashed a blistering attack on Apple on her blog for releasing the fix for iOS without patching the desktop at the same time:

WHAT THE EVER LOVING F**K, APPLE??!?!! Did you seriously just use one of your platforms to drop an SSL 0day on your other platform? As I sit here on my mac I’m vulnerable to this and there’s nothing I can do, because you couldn’t release a patch for both platforms at the same time? You do know there’s a bunch of live, working exploits for this out in the wild right now, right?

As she points out, Apple’s own security update system uses SSL - so might that be hacked by a “man in the middle” attack to plant malware?

How about your update system itself – is that vulnerable?

Come the hell on, Apple. You just dropped an ugly 0day [zero-day vulnerability] on us and then went home for the weekend – goto fail indeed.

FIX. YOUR. SHIT.

Why didn’t Apple fix the bug for Mac OSX at the same time as it did for iOS?

It certainly should have, as Paget points out. Another former Apple staffer agrees; apparently Apple decided instead to roll out the fix for Mavericks as part of its 10.9.2 software update rather than as a separate security update (which it has done before). But other bugs were found in 10.9.2, delaying its release - and reducing the security of Mac users. (As noted above, the fix for OSX was rolled out on Tuesday, four days after that for iOS.)

Have there been mistakes like this before?

Mistakes abound in software - and encryption mistakes are surprisingly easy to make. In May 2011, a security researcher pointed out that WhatsApp user accounts could be hijacked because they weren’t encrypted at all; until September 2011, there was a flaw in the same app which let people send forged messages pretending to be from anyone.

A common mistake is the use of default logins which are left active: millions of routers around the world have default user IDs and passwords (often “admin” and “admin”) which can be exploited by hackers.

A similar coding mistake froze Microsoft’s Zune players on the last day of 2008 - which, as luck would have it, was a leap year. The date calculation in the driver for the device’s clock chip couldn’t cope with the 366th day of the year, so the players hung when they reached it.
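The Zune failure is usually traced to a loop in the clock driver that converts a running count of days into a year. The sketch below reconstructs that logic from the widely circulated driver source; the function names, the 1980 starting year and the iteration cap are assumptions added here so the example runs and terminates. On 31 December of a leap year the remaining count is exactly 366, the leap-year branch subtracts nothing, and the loop never ends.

```c
#include <stdbool.h>

#define ORIGIN_YEAR 1980u  /* assumption: day 1 is 1 January 1980 */

static bool is_leap_year(unsigned year) {
    return (year % 4 == 0 && year % 100 != 0) || year % 400 == 0;
}

/* Reconstruction of the faulty loop. The cap stands in for the hang so the
   sketch terminates; returns false if the cap was hit. */
static bool days_to_year_buggy(unsigned days, unsigned *year_out,
                               unsigned *day_of_year_out, unsigned max_iterations) {
    unsigned year = ORIGIN_YEAR;
    unsigned iterations = 0;
    while (days > 365) {
        if (++iterations > max_iterations)
            return false;              /* the real loop had no cap: the device froze */
        if (is_leap_year(year)) {
            if (days > 366) {
                days -= 366;
                year += 1;
            }
            /* days == 366 in a leap year: nothing changes, so the loop spins */
        } else {
            days -= 365;
            year += 1;
        }
    }
    *year_out = year;
    *day_of_year_out = days;
    return true;
}

/* Fixed version: stop as soon as the remaining days fit in the current year. */
static void days_to_year_fixed(unsigned days, unsigned *year_out, unsigned *day_of_year_out) {
    unsigned year = ORIGIN_YEAR;
    for (;;) {
        unsigned len = is_leap_year(year) ? 366u : 365u;
        if (days <= len)
            break;
        days -= len;
        year += 1;
    }
    *year_out = year;
    *day_of_year_out = days;
}
```

Counting 1 January 1980 as day 1, day 10593 is 31 December 2008: `days_to_year_buggy` hits its cap, while `days_to_year_fixed` reports year 2008, day 366. One day earlier (10592), both versions agree on 2008, day 365.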

Apple has also made some similarly egregious errors - notably alarms which didn’t adjust when daylight saving time did, and so woke people up an hour early or late.

Google too had an error in version 4.2.0 of its Android software: you couldn’t add the birthdays of people born in December to their contacts, because that month wasn’t included.

More seriously, versions of Google’s Android up to version 4.2 could see connections made over open Wi-Fi hijacked and malicious code injected, UK-based MWR Labs said in September 2013. It’s unclear whether that has yet been patched; millions of Android devices are still using versions below 4.2. Ars Technica, reporting that flaw, noted that “while the weakness can largely be prevented in Android 4.2, users are protected only if developers of each app follow best practices.”

And Microsoft had a long-running flaw in Windows 95 and Windows 98 which meant that if your computer had been running continuously for just under 50 days, it would suddenly hang - and you’d have to reboot it. Why? Because it measured “uptime” using a 32-bit counter which incremented every millisecond. After 2^32 milliseconds (about 49 days and 17 hours) the counter ran out of room and wrapped back to zero, and that is what hung the machine. That’s quite aside from the many flaws exploited in its ActiveX software to install malicious programs on Windows machines in so-called “drive-by installations”.
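The arithmetic behind the 49.7-day figure is easy to check, and so is the wraparound: a 32-bit unsigned counter holds values up to 2^32 - 1, and one more tick takes it back to zero. The helper names (`Duration`, `from_milliseconds`, `counter_after`) are illustrative; the sketch only does the unit conversion and the truncation to 32 bits, nothing Windows-specific.

```c
#include <stdint.h>

/* Break a millisecond count into whole days, hours, minutes and seconds. */
typedef struct { uint64_t days, hours, minutes, seconds; } Duration;

static Duration from_milliseconds(uint64_t ms) {
    uint64_t s = ms / 1000;  /* drop the leftover milliseconds */
    Duration d = { s / 86400, (s % 86400) / 3600, (s % 3600) / 60, s % 60 };
    return d;
}

/* What a 32-bit millisecond counter reads after `elapsed_ms` of uptime:
   converting to uint32_t keeps only the low 32 bits, so it wraps to 0 at 2^32. */
static uint32_t counter_after(uint64_t elapsed_ms) {
    return (uint32_t)elapsed_ms;
}
```

For 2^32 ms, `from_milliseconds` gives 49 days, 17 hours, 2 minutes and 47 seconds (plus 296 ms left over); `counter_after` reads 4294967295 one millisecond earlier and 0 at that moment.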

What lessons are there from this?

In the words of Arie Van Deursen, professor in software engineering at Delft University of Technology in the Netherlands,

When first seeing this code, I was once again caught by how incredibly brittle programming is. Just adding a single line of code can bring a system to its knees.

Not only that - but the flawed code has been both used and published for 18 months, and tested by a government security organisation which passed it for use. Software bugs can be pernicious - and they can lurk in the most essential areas. And even companies which have been writing software for decades can fall foul of them.

But the former staffer at Apple says that unless the company introduces better testing regimes - static code analysis, unit testing, regression testing - “I’m not surprised by this… it will only be a matter of time until another bomb like this hits.” The only - minimal - comfort: “I doubt it is malicious.”
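A regression test of the kind the former staffer describes needs at least one negative case. goto fail let genuine signatures through as well as forged ones, so a test that only checked a valid connection would have passed; a test that deliberately tampers with a signature and expects rejection would have failed immediately. In this sketch the “signature” is a toy FNV-1a checksum (a hypothetical stand-in, not cryptographically secure) so the test is self-contained; `checksum_sign`, `checksum_verify` and `run_signature_tests` are illustrative names.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Toy "signature": a 32-bit FNV-1a checksum standing in for a real signature. NOT secure. */
static uint32_t checksum_sign(const uint8_t *msg, size_t len) {
    uint32_t h = 2166136261u;            /* FNV-1a 32-bit offset basis */
    for (size_t i = 0; i < len; i++) {
        h ^= msg[i];
        h *= 16777619u;                  /* FNV-1a 32-bit prime */
    }
    return h;
}

/* Verifier under test: 0 on success, nonzero on failure. */
static int checksum_verify(const uint8_t *msg, size_t len, uint32_t sig) {
    return checksum_sign(msg, len) == sig ? 0 : -1;
}

/* Returns the number of failed checks; 0 means every case behaved. */
static int run_signature_tests(void) {
    const uint8_t msg[] = "server key exchange parameters";
    const size_t len = sizeof msg - 1;   /* exclude the terminating NUL */
    uint32_t good = checksum_sign(msg, len);
    int failures = 0;

    if (checksum_verify(msg, len, good) != 0)
        failures++;                      /* a genuine signature must pass */
    if (checksum_verify(msg, len, good ^ 1u) == 0)
        failures++;                      /* a tampered signature must be rejected */

    uint8_t altered[sizeof msg];
    memcpy(altered, msg, sizeof msg);
    altered[0] ^= 0x01;                  /* flip one bit of the message */
    if (checksum_verify(altered, len, good) == 0)
        failures++;                      /* a tampered message must be rejected */

    return failures;
}
```

The two negative cases are the ones that matter: a verifier that always answered “success”, as goto fail effectively did, would pass the first check and fail the other two.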
